Chaeyun Jang

I am a PhD student in the Statistical Inference and Machine Learning Lab at the Graduate School of AI, Korea Advanced Institute of Science and Technology (KAIST), advised by Juho Lee. My research focuses on developing methods for uncertainty calibration in large language models (LLMs), with the goal of improving their reliability and trustworthiness in real-world decision-making tasks. I received my MS in AI from KAIST in 2025, and my BS in Statistics and Computer Science from Sungkyunkwan University (SKKU) in 2023.

Email: jcy9911[at]kaist.ac.kr / GitHub / Google Scholar

Publications

I'm interested in large language models, uncertainty calibration, Bayesian machine learning, and trustworthy AI.

Reliable Decision‑Making via Calibration‑Oriented Retrieval‑Augmented Generation
Chaeyun Jang, Deukhwan Cho, Seanie Lee, Hyungi Lee, Juho Lee
NeurIPS, 2025
code / website
Recent work introduces CalibRAG, a retrieval-augmented generation method designed to ensure that decisions informed by LLMs are well calibrated, using forecasting functions to assess decision correctness.

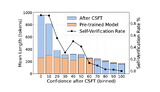

Verbalized Confidence Triggers Self-Verification: Emergent Behavior Without Explicit Reasoning Supervision
Chaeyun Jang, Moonseok Choi, Yegon Kim, Hyungi Lee, Juho Lee
ICML R2-FM Workshop, 2025
arxiv / website
We show that fine-tuning LLMs with only scalar confidence labels elicits self-verification behavior without explicit reasoning supervision. Our method improves calibration and accuracy on GSM8K, MATH-500, and ARC-Challenge, while enhancing interpretability by aligning reasoning with confidence.

Dimension Agnostic Neural Processes
Hyungi Lee, Chaeyun Jang, Dongbok Lee, Juho Lee
ICLR, 2025
arxiv / website
Dimension Agnostic Neural Processes is a new meta-learning framework that handles diverse input and output dimensions via a Dimension Aggregator Block, which transforms features into fixed-dimensional representations.

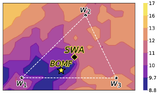

Model Fusion through Bayesian Optimization in Language Model Fine-Tuning
Chaeyun Jang, Hyungi Lee, Jungtaek Kim, Juho Lee
NeurIPS (Spotlight), 2024
arxiv / code / website
We introduce a novel model fusion technique, BOMF, that optimizes both the desired metric and loss via multi-objective Bayesian optimization, guided by a two-stage hyperparameter search.

Invited Talks

Teaching Assistant

Conference Reviewer

Honors & Awards
